Emerging Enterprise GenAI Developer Trends

Enterprise developers have been hard at work crafting, experimenting, and innovating with LLMs at a remarkable pace, giving shape to the application layer.

I’ve been diving into several recent reports on emerging developer trends within enterprises. One striking data point from a McKinsey survey shows that the adoption of Generative AI has surged: 71% of organizations reported using it in at least one business function in 2024, up from just 33% in 2023. Amidst this rapid transformation, several key trends are taking shape. Here’s my synthesis of eight that stand out:

Preferred LLM

Buy vs Build

Popular Use-case

Agentic Interoperability

Fine-tuning

Text vs Multi-modal

Need for RAG

Preferred cloud platforms

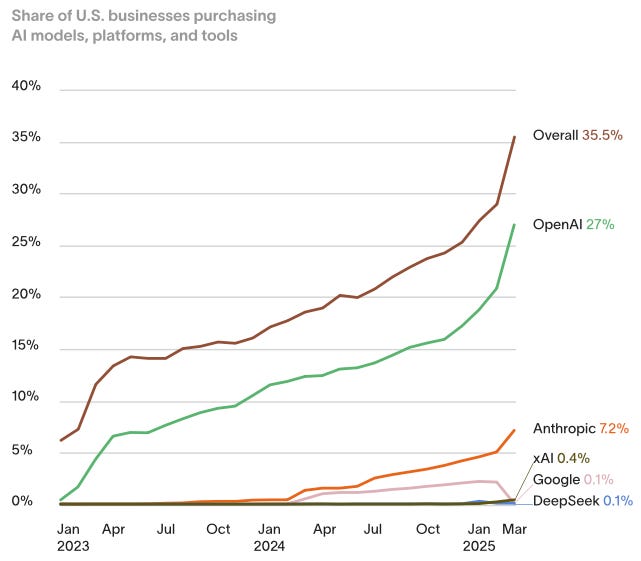

1. Preferred LLM - The status quo has not budged:

OpenAI is still the most used model provider, according to the Ramp AI Index report, followed by Anthropic, xAI, Google, and DeepSeek. This likely includes both API usage and enterprise subscriptions to their products.

This is not all that surprising given the momentum OpenAI has from being first in this space, complemented by Microsoft Azure’s distribution. Models are becoming increasingly commoditized, however, so this could change quickly.

2. Buying is increasingly preferred over building:

Deciding whether to buy or build Generative AI capabilities hinges on factors like speed to market (faster with buying), cost and ROI (building can be expensive and risky), available talent (scarce, favoring buying), data readiness and security needs, and the desire for differentiation (build for unique advantage). Read more in this KPMG blog.

The trend in 2025 is shifting towards buying or licensing GenAI solutions because of the complexity of building them. Companies are focusing on enterprise-ready platforms and gaining initial experience through experimentation. While many will start by buying, the evolution will be towards customizing these solutions or building specific applications on top (a "buy then customize" hybrid model) to balance speed with tailored capabilities. Time-to-value is increasingly prioritized over pure cost, which encourages quicker adoption through purchasing foundational GenAI.

3. Marketing & Sales Use-cases Dominate:

The McKinsey survey indicates that Marketing & Sales is where most enterprises are finding value from building Gen AI solutions. A separate Stanford report suggests that companies are seeing the highest revenue gains from using Gen AI in the Marketing & Sales function of their organizations.

4. Agentic Tooling & Interoperability - MCP is catching on:

Another popular theme that has emerged lately is agentic interoperability. While its usage in enterprises is still unknown, we know from the global developer community that MCP adoption has picked up: all major model providers (OpenAI, Google, Amazon, etc.) now support it, key features have been merged, and toolmakers are rallying around it as the standard interface between agents and tools.

MCP solves two core problems:

It gives LLMs the right context to handle unfamiliar tasks.

It replaces custom integrations with a clean, modular client-server model.

MCP makes tools composable and interoperable, turning standalone systems into building blocks for agent ecosystems. And since clients and servers are just logical roles, any agent can both consume and expose capabilities—e.g., a coding agent might pull GitHub issues while offering code analysis to others.
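The client-server split described above can be sketched in a few lines of Python. This is a toy, in-process illustration under stated assumptions, not the official MCP SDK or the full protocol: the `ToyToolServer` and `ToyClient` classes, the tool names, and the sample issue data are all hypothetical. It only borrows the JSON-RPC 2.0 message shape and the `tools/list` and `tools/call` method names that MCP uses, and it simplifies the result payloads.

```python
import json
from typing import Any, Callable, Dict, List


class ToyToolServer:
    """In-process stand-in for an MCP-style server (hypothetical, not the real SDK)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        self._descriptions: Dict[str, str] = {}

    def register(self, name: str, description: str, fn: Callable[..., Any]) -> None:
        """Expose a Python callable as a named tool."""
        self._tools[name] = fn
        self._descriptions[name] = description

    def handle(self, raw_request: str) -> str:
        """Handle one JSON-RPC-shaped request string and return a JSON response string."""
        req = json.loads(raw_request)
        params = req.get("params", {})
        base = {"jsonrpc": "2.0", "id": req.get("id")}
        if req.get("method") == "tools/list":
            tools = [{"name": n, "description": d} for n, d in self._descriptions.items()]
            return json.dumps({**base, "result": {"tools": tools}})
        if req.get("method") == "tools/call":
            fn = self._tools.get(params.get("name"))
            if fn is None:
                return json.dumps({**base, "error": {"code": -32602, "message": "unknown tool"}})
            # Simplified payload: real MCP results carry typed content items.
            return json.dumps({**base, "result": {"content": fn(**params.get("arguments", {}))}})
        return json.dumps({**base, "error": {"code": -32601, "message": "method not found"}})


class ToyClient:
    """Minimal client: the same code can talk to any ToyToolServer."""

    def __init__(self, server: ToyToolServer) -> None:
        self._server = server
        self._next_id = 0

    def _request(self, method: str, params: Dict[str, Any]) -> Dict[str, Any]:
        self._next_id += 1
        raw = json.dumps({"jsonrpc": "2.0", "id": self._next_id, "method": method, "params": params})
        response = json.loads(self._server.handle(raw))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]

    def list_tools(self) -> List[str]:
        return [tool["name"] for tool in self._request("tools/list", {})["tools"]]

    def call_tool(self, name: str, **arguments: Any) -> Any:
        return self._request("tools/call", {"name": name, "arguments": arguments})["content"]


# One server exposes issues (hard-coded sample data), another exposes code analysis.
issue_server = ToyToolServer()
issue_server.register("list_issues", "Return open issues for a repo.",
                      lambda repo: [f"{repo}#1: fix login bug"])

analysis_server = ToyToolServer()
analysis_server.register("count_lines", "Count lines in a code snippet.",
                         lambda code: len(code.splitlines()))
```

Because both servers accept the same request shape, one client class can discover and call tools on either of them, which is the composability argument in miniature. An agent that holds a `ToyClient` for `issue_server` while also owning `analysis_server` plays both roles at once.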

Google Cloud announced the A2A agentic interoperability protocol, which complements MCP. It empowers developers to build agents that connect with other agents. This will be an interesting space to watch in 2025.

5. Fine-tuning is rare but picking up speed:

There’s no reliable data to indicate whether enterprises building GenAI apps internally opt for fine-tuning. There is, however, a general uptick in fine-tuning over time, as the process is much more streamlined now through providers such as Amazon Bedrock, GCP Vertex AI, Together AI, and Cohere. Together AI reported $100M in ARR, which is a strong indicator that companies building vertical agents might be opting to fine-tune their models for specific products.

While fine-tuning standard models has its advantages, it is also prone to diminishing returns as newer and more capable models enter the marketplace. The other challenge is that most enterprises have neither the data for fine-tuning nor the technical expertise to do it. RAG has been a great substitute in such cases. Nonetheless, this will be an interesting and important area to watch.

6. Text is dominant, but multi-modal use of Gen AI is increasing:

According to the McKinsey survey, 63% of respondents say their organizations use gen AI to generate text, with many also experimenting with images (over one-third) and code (over one-quarter). Tech sector firms report the widest range of outputs, while advanced industries more often use gen AI for images and audio.

7. Need for RAG is declining as context window length increases:

Here’s an interesting post by The Sequence on Substack, which indicates that the popularity of vector databases soared in 2023 and 2024 as developers started building Gen AI apps, but that they are being repositioned now that context windows are becoming larger and larger. While RAG is here to stay, it may not be as applicable to every problem as it once was. Watch this video where a Google DeepMind researcher talks about context window length:
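The trade-off can be made concrete with a small sketch: if the whole corpus fits in the model's context window, pass it all in; otherwise fall back to retrieval. Everything here is an illustrative assumption rather than a recommended implementation: the function names are hypothetical, the 4-characters-per-token estimate is a rough heuristic, and plain keyword overlap stands in for the vector search a real RAG pipeline would use.

```python
from typing import List

CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a text from its character length."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def keyword_score(query: str, doc: str) -> int:
    """Count distinct query words that also appear in the document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def build_context(query: str, docs: List[str], context_budget_tokens: int) -> List[str]:
    """Return the documents to place in the prompt for this query."""
    total = sum(estimate_tokens(doc) for doc in docs)
    if total <= context_budget_tokens:
        # Long-context path: everything fits, so no retrieval step is needed.
        return list(docs)
    # Retrieval path: rank by relevance, then add documents while they fit the budget.
    ranked = sorted(docs, key=lambda doc: keyword_score(query, doc), reverse=True)
    selected: List[str] = []
    used = 0
    for doc in ranked:
        cost = estimate_tokens(doc)
        if used + cost <= context_budget_tokens:
            selected.append(doc)
            used += cost
    return selected
```

With a large `context_budget_tokens` the function returns every document unchanged, and the retrieval branch only matters once the corpus outgrows the window. That is the sense in which longer context windows narrow, but do not eliminate, the set of problems that need RAG.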

8. Preferred Cloud Platforms/Services:

Enterprise GenAI development heavily relies on major cloud platforms: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). These hyperscalers lead the market, with Q1 2025 shares at 29% (AWS), 22% (Azure), and 12% (GCP), all showing strong AI-driven growth. They offer foundational models, specialized tools, and MLOps capabilities, becoming central hubs for GenAI innovation.
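As a quick check on what those shares add up to, the arithmetic can be written out as below. The variable names are only for illustration, and the percentages are the Q1 2025 figures quoted above.

```python
# Q1 2025 cloud market shares as quoted in the text (percent).
shares = {"AWS": 29, "Azure": 22, "GCP": 12}

combined = sum(shares.values())  # share held by the three hyperscalers together
remainder = 100 - combined       # share left for all other providers

print(f"Top three combined: {combined}%, everyone else: {remainder}%")
```

In other words, the three hyperscalers together account for a little under two-thirds of the market on these figures.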

Comparative Strengths for GenAI:

AWS:

Key Services: Amazon Bedrock (diverse foundation models), SageMaker (full ML platform), Amazon Nova (foundational models), custom silicon (Trainium, Inferentia).

Strengths: Mature platform, vast services, cost optimization focus, scalable storage (S3), advanced networking, comprehensive MLOps in SageMaker, and GEP framework for democratizing GenAI.

Microsoft Azure:

Key Services: Azure AI Platform (including Azure AI Foundry), Azure OpenAI Service (access to OpenAI models like GPT-4), Copilot assistants.

Strengths: Strong enterprise foothold, seamless Microsoft ecosystem integration, robust hybrid cloud (Azure Arc), skilling initiatives, mature DevOps (Azure DevOps, GitHub Actions), and hybrid security excellence.

Google Cloud Platform (GCP):

Key Services: Vertex AI (access to Gemini, Imagen, Veo models), Google Kubernetes Engine (GKE) for AI, Tensor Processing Units (TPUs like Ironwood), Google Agentspace.

Strengths: AI research leadership (from Google AI), strong data analytics (BigQuery) and ML capabilities, AutoML, MLOps in Vertex AI, global network, real-time AI processing, and AI-driven threat detection.

Primary Sources: McKinsey Survey, Stanford